Joykirat Singh

Chapel Hill, NC

I’m Joykirat Singh, a first-year PhD student in the Computer Science Department at the University of North Carolina at Chapel Hill, where I am advised by Prof. Mohit Bansal.

Previously, I worked at Microsoft Research India as a Research Fellow, under the mentorship of Dr. Akshay Nambi.

My research interests lie in building interpretable AI models with reasoning capabilities. I aim to study how the behavior of large language models (LLMs) emerges as a function of their training data, and how their internal mechanisms evolve or emerge during training. I am particularly interested in understanding whether LLMs can truly reason and perform long-horizon planning without relying on biased priors or superficial pattern recognition. Additionally, I want to develop AI systems that enhance their reasoning capabilities while minimizing their dependence on shallow patterns learned during pre-training.

I graduated in 2023 with a B.Tech in Computer Science and Design from the Indraprastha Institute of Information Technology (IIIT) Delhi, where I received a silver medal for academic excellence. During my undergraduate studies, I had the opportunity to collaborate with Dr. Md Shad Akhtar.

I also served as a Research Assistant at the Indian Institute of Technology (IIT) Delhi, working alongside Dr. Tanmoy Chakraborty and Dr. Soumen Chakrabarti on various research projects.

News

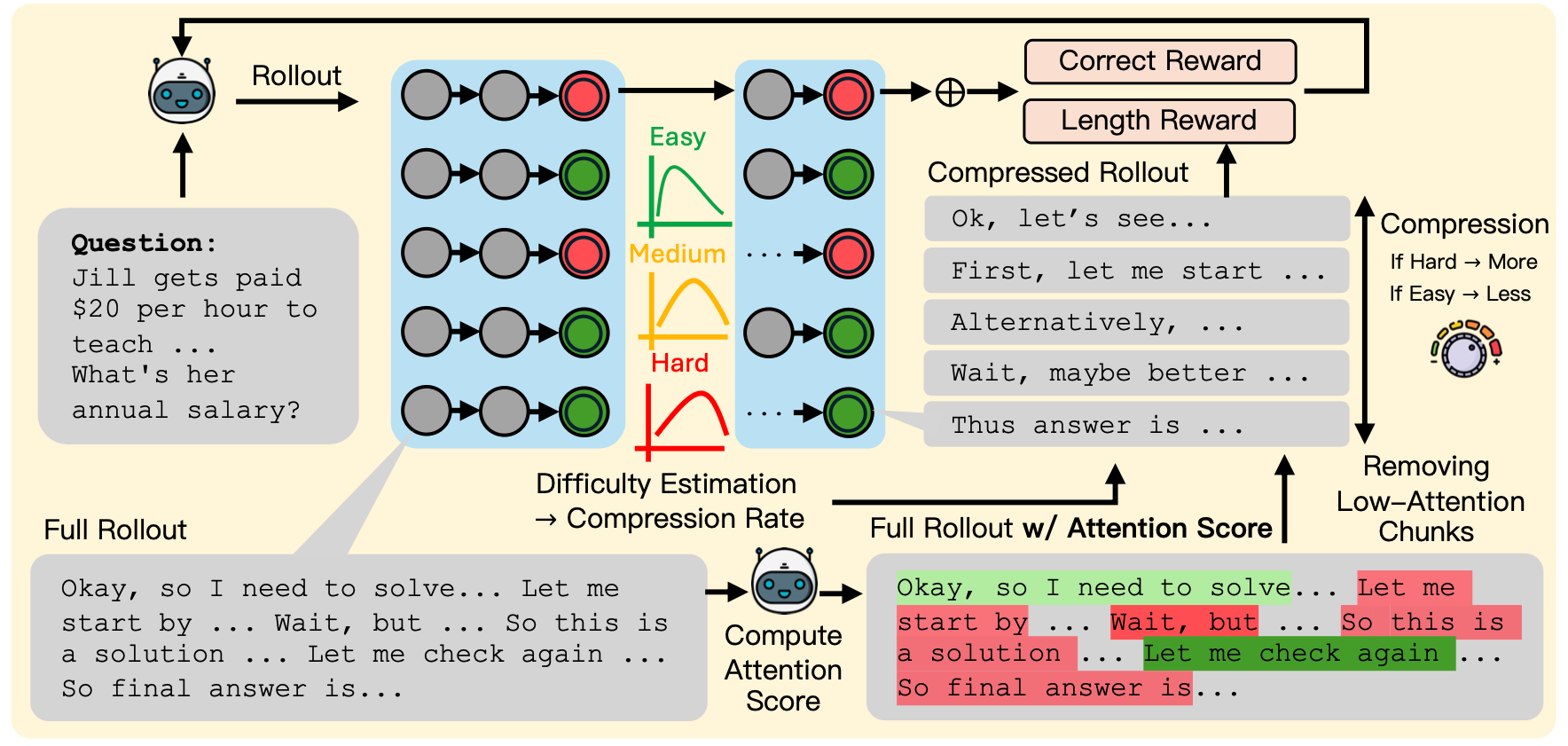

| Oct 02, 2025 | Check out Think Right: Learning to Mitigate Under-Over Thinking via Adaptive, Attentive Compression, our new preprint on arxiv. |

|---|---|

| Aug 20, 2025 | Data-scarce Behavior Editing of Language Models accepted at EMNLP 2025 Findings! |

| Jun 01, 2025 | Joined University of North Carolina at Chapel Hill as a Computer Science PhD Student and will be advised by Prof. Mohit Bansal. I will be working on language models, focusing on reasoning, tool use and interpretability. Excited to be part of the vibrant research community at UNC. |

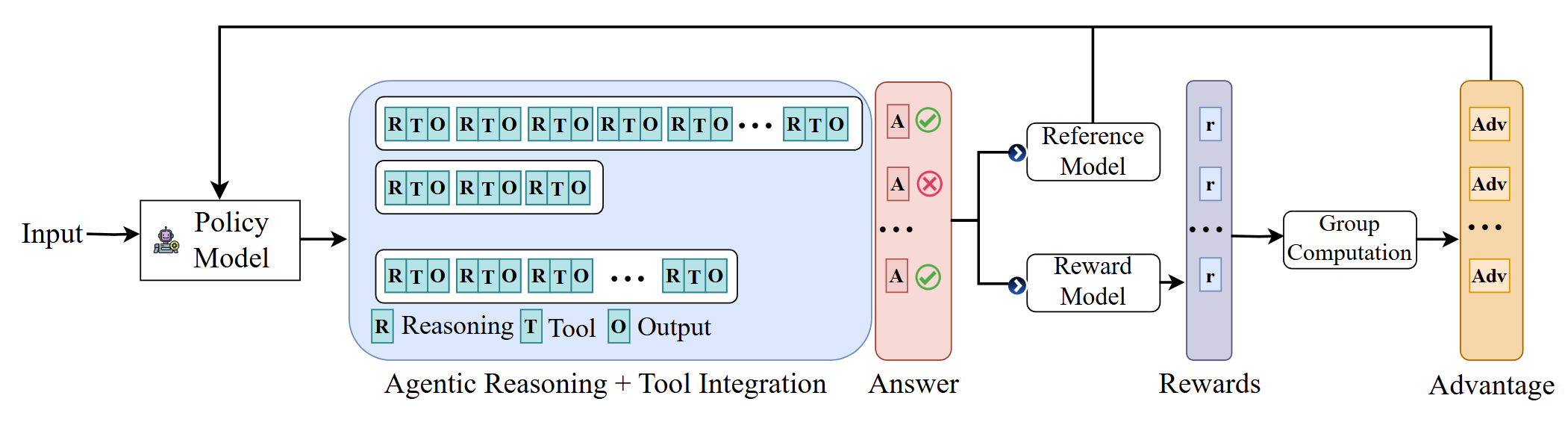

| May 20, 2025 | ARTIST enables models to autonomously decide when, how, and which tools to invoke within multi-turn reasoning chains. Check out the preprint to learn how it enhances reasoning capabilities in language models by leveraging tool invocation strategies. |

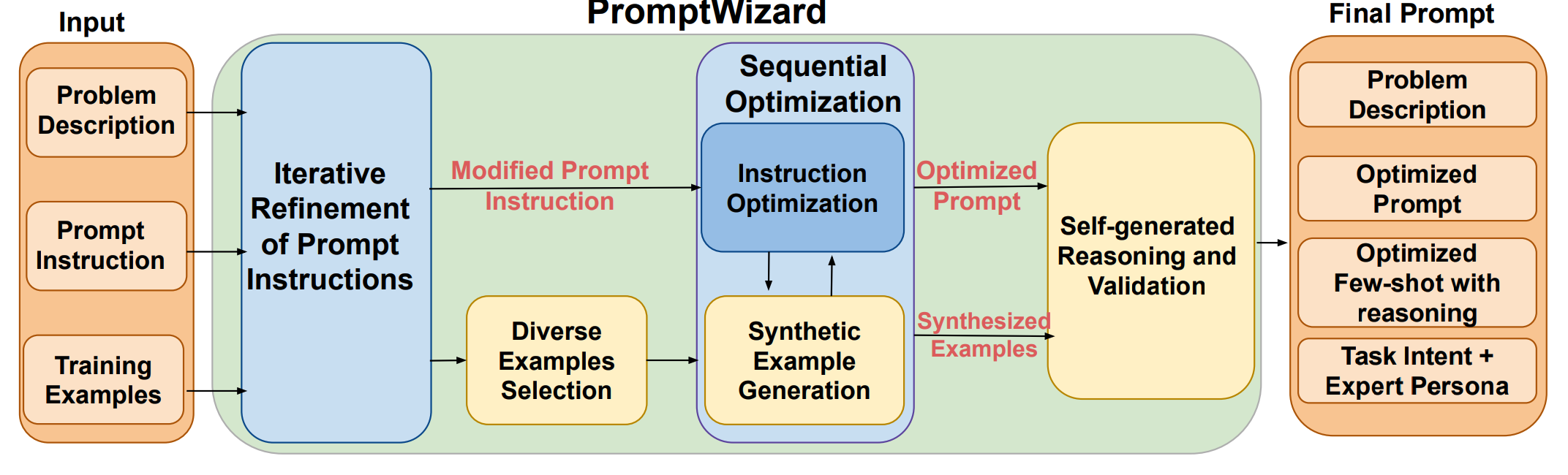

| May 16, 2025 | MWP-MISTAKE and PROMPTWIZARD accepted at ACL 2025 MAINS and ACL 2025 FINDINGS, respectively! |

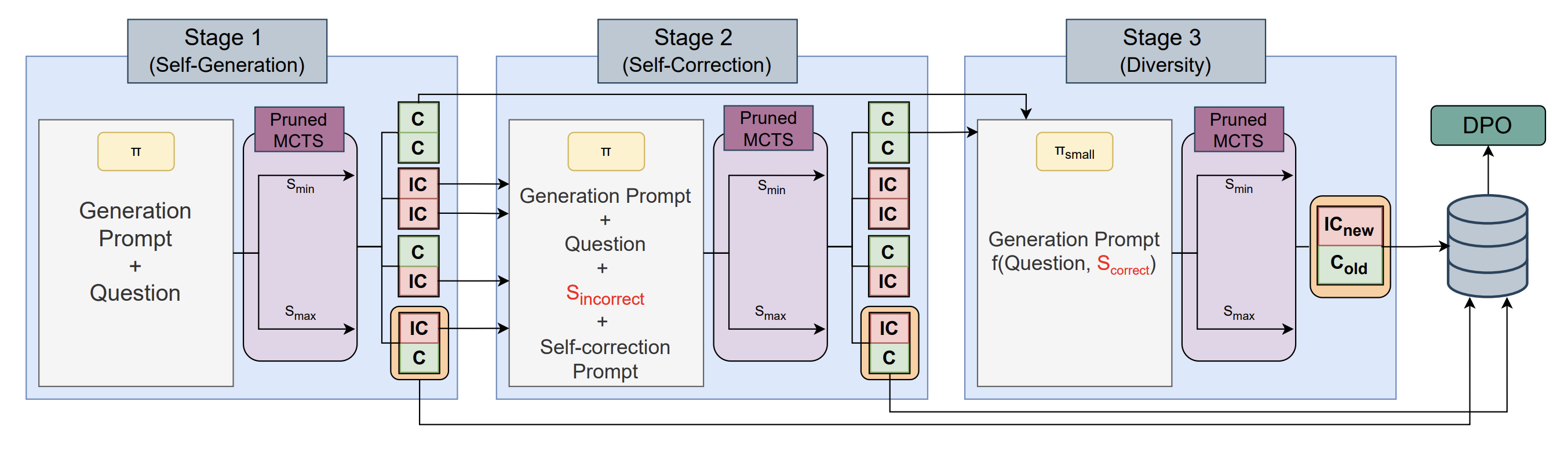

| Mar 04, 2025 | Preprint SPHERE builds a self-evolving data generation pipeline that enhances reasoning in small language models (SLMs) by iteratively generating, correcting, and diversifying reasoning chains. |

| Oct 03, 2024 | Preprint PROMPTWIZARD is out! PromptWizard introduces a novel, fully automated framework for discrete prompt optimization, utilizing a self-evolving, self-adapting mechanism. |

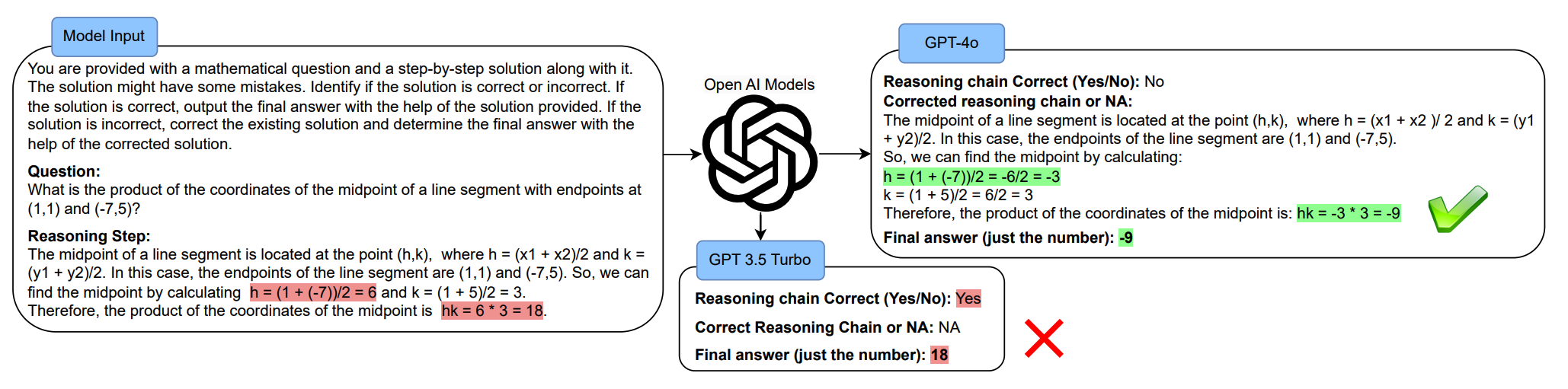

| Jun 16, 2024 | Preprint MWP-MISTAKE on exploring language models mistake detection and their correction capabilities is out. |

| Jun 01, 2024 | Joined Microsoft Research as a Research Fellow under the mentorship of Dr. Akshay Nambi. Excited to explore the fascinating world of Large Language Models and their reasoning capabilities! 🚀 |

Publications


- Exposing the Achilles’ Heel: Evaluating LLMs Ability to Handle Mistakes in Mathematical Reasoning (2024)


- PromptWizard: Task-Aware Agent-driven Prompt Optimization Framework (2024)

